Announcing cni-ipvlan-vpc-k8s: IPvlan Overlay-free Kubernetes Networking in AWS

Lyft is pleased to announce the initial open source release of our IPvlan-based CNI networking stack for running Kubernetes at scale in AWS.

cni-ipvlan-vpc-k8s provides a set of CNI and IPAM plugins implementing a simple, fast, and low-latency networking stack for running Kubernetes within Virtual Private Clouds (VPCs) on AWS.

Background

Today Lyft runs in AWS with Envoy as our service mesh but without using containers in production. We use a home-grown, somewhat bespoke stack to deploy our microservice architecture onto service-assigned EC2 instances with auto-scaling groups that dynamically scale instances based on load.

While this architecture has served us well for a number of years, there are significant benefits to moving toward a reliable and scalable open source container orchestration system. Given our previous work with Google and IBM to bring Envoy to Kubernetes, it should be no surprise that we’re rapidly moving Lyft’s base infrastructure substrate to Kubernetes.

We’re handling this change as a two-phase migration: initially deploying Kubernetes clusters for native Kubernetes applications such as TensorFlow and Apache Flink, followed by a migration of Lyft-native microservices where Envoy is used to unify a mesh that spans both the legacy infrastructure and Lyft services running on Kubernetes. It’s critical that both Kubernetes-native services and Lyft-native services be able to communicate and share data as first-class citizens. Networking these environments together must be low latency, high throughput, and easy to debug if issues arise.

Kubernetes networking in AWS: historically a study in tradeoffs

Deploying Kubernetes at scale on AWS is not a simple or straightforward task. While much work has been done in the community to easily and quickly spin up small clusters in AWS, until recently there hasn’t been an immediate and obvious path to mapping Kubernetes networking requirements onto AWS VPC network primitives.

The simplest path to meeting Kubernetes’ network requirement is to assign a /24 subnet to every node, providing more than the 110 Pod IPs needed to reach the default maximum of schedulable Pods per node. As nodes join and leave the cluster, a central VPC route table is updated. Unfortunately, AWS’s VPC product has a default maximum of 50 non-propagated routes per route table, which can be increased up to a hard limit of 100 routes at the cost of potentially reducing network performance. This means you’re effectively limited to 50 Kubernetes nodes per VPC using this method.
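The arithmetic behind that limit can be checked with a minimal sketch. The 110-Pod and 50/100-route figures come from the paragraph above; the function names, the example subnet, and the `reserved_routes` parameter are hypothetical and exist only for illustration.

```python
import ipaddress

POD_LIMIT_PER_NODE = 110   # default maximum of schedulable Pods per node (from the text)
DEFAULT_ROUTE_LIMIT = 50   # default non-propagated routes per route table (from the text)
HARD_ROUTE_LIMIT = 100     # hard limit cited in the text


def usable_hosts(cidr: str) -> int:
    """Count addresses in a subnet, excluding the network and broadcast addresses."""
    net = ipaddress.ip_network(cidr)
    return max(net.num_addresses - 2, 0)


def max_nodes(route_limit: int, reserved_routes: int = 0) -> int:
    """Each node consumes one route; some routes may be reserved for other uses."""
    return max(route_limit - reserved_routes, 0)


node_subnet = "10.0.1.0/24"  # hypothetical per-node subnet
print(usable_hosts(node_subnet) >= POD_LIMIT_PER_NODE)  # a /24 covers 110 Pods
print(max_nodes(DEFAULT_ROUTE_LIMIT))                   # node ceiling at the default limit
print(max_nodes(HARD_ROUTE_LIMIT))                      # node ceiling at the hard limit
```

Any route the table already needs for other purposes lowers the ceiling further, which is what `reserved_routes` models.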

When considering clusters larger than 50 nodes in AWS, you’ll quickly find recommendations to use more exotic networking techniques such as overlay networks (IP in IP) and BGP for dynamic routing. All of these approaches add massive complexity to your Kubernetes deployment, effectively requiring you to administer and debug a custom software-defined network stack running on top of Amazon’s native VPC software-defined network stack. Why would you run an SDN on top of an SDN?

Simpler solutions

After staring at the AWS VPC documentation, the CNI spec, Kubernetes networking requirement documents, kube-proxy iptables magic, and all the various Linux network driver and namespace options, we concluded that it’s possible to create simple and straightforward CNI plugins which drive native AWS network constructs to provide a compliant Kubernetes networking stack.

Lincoln Stoll’s k8s-vpcnet and, more recently, Amazon’s amazon-vpc-cni-k8s CNI stacks use Elastic Network Interfaces (ENIs) and secondary private IPs to achieve overlay-free, AWS VPC-native solutions for Kubernetes networking. While both of these solutions achieve the same base goal of drastically simplifying the network complexity of deploying Kubernetes at scale on AWS, they do not focus on minimizing network latency and kernel overhead as part of implementing a compliant networking stack.

A very simple and low-latency solution

We developed our solution using IPvlan, bypassing the cost of forwarding packets through the default namespace to connect host ENI adapters to their Pod virtual adapters. We directly tie host ENI adapters to Pods.

In "IPVLAN - The Beginning", Mahesh Bandewar and Eric Dumazet discuss needing an alternative to forwarding as a motivation for writing IPvlan:

"Though this solution [forwarding packets from and to the default namespace] works on a functional basis, the performance / packet rate expected from this setup is much lesser since every packet that is going in or out is processed 2+ times on the network stack (2x Ingress + Egress or 2x Egress + Ingress). This is a huge cost to pay for."

We also wanted the system to be host-local with minimal moving parts and state; our network stack contains no network services or daemons. As AWS instances boot, CNI plugins communicate with AWS networking APIs to provision network resources for Pods.

Lyft’s network architecture for Kubernetes: a low-level overview

The primary EC2 boot ENI with its primary private IP is used as the IP address for the node. Our CNI plugins manage additional ENIs and private IPs on those ENIs to assign IP addresses to Pods.

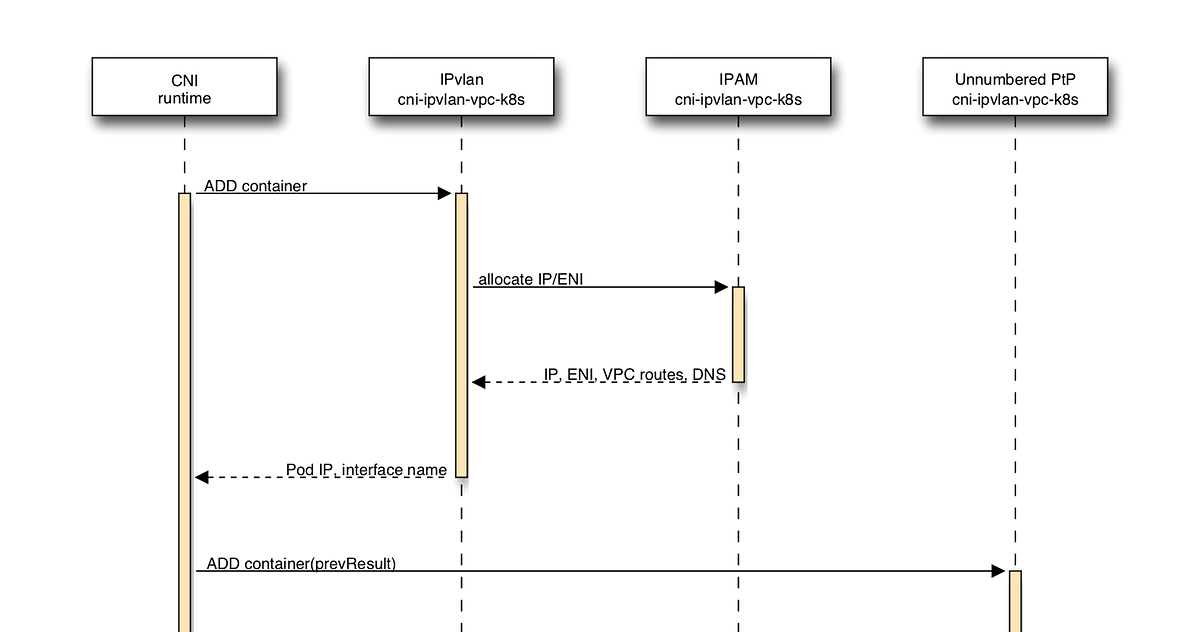
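As a rough illustration of how this layout bounds the number of Pod IPs on an instance, here is a minimal sketch. It assumes that the boot ENI is reserved for the node and that each additional ENI keeps its own primary private IP, so only the remaining addresses go to Pods; that split is an assumption for illustration, not a confirmed detail of the plugins. The `Eni` class, both functions, and the 8 x 30 limits are hypothetical; real per-instance-type limits come from AWS documentation.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Eni:
    device_index: int          # 0 is the boot ENI used for the node itself
    max_ips: int               # total private IPs allowed on this ENI
    secondary_ips: List[str] = field(default_factory=list)

    def free_slots(self) -> int:
        # Assumption: one slot is always taken by the ENI's own primary private IP.
        return self.max_ips - 1 - len(self.secondary_ips)


def pod_ip_capacity(max_enis: int, ips_per_eni: int) -> int:
    """Upper bound on Pod IPs: every ENI except the boot ENI, minus its primary IP."""
    return max(max_enis - 1, 0) * max(ips_per_eni - 1, 0)


def pick_eni(enis: List[Eni]) -> Optional[Eni]:
    """Return the first non-boot ENI with a free slot, or None if all are full."""
    for eni in enis:
        if eni.device_index > 0 and eni.free_slots() > 0:
            return eni
    return None


# Hypothetical instance limits: 8 ENIs with 30 private IPs each.
print(pod_ip_capacity(max_enis=8, ips_per_eni=30))
```

When `pick_eni` returns `None`, an allocator following this model would need to attach another ENI (if the instance limit allows) before it could hand out a new Pod IP.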

Each Pod contains two network interfaces: a primary IPvlan interface and an unnumbered point-to-point virtual ethernet interface. These interfaces are created via a chained CNI execution.

- IPvlan interface: The IPvlan interface with the Pod’s IP is used for all VPC traffic and provides minimal overhead for network packet processing within the Linux kernel. The master device is the ENI of the associated Pod IP. IPvlan is used in L2 mode with isolation provided from all other ENIs, including the boot ENI handling traffic for the Kubernetes control plane.

- Unnumbered point-to-point interface: A pair of virtual ethernet interfaces (veth) without IP addresses is used to interconnect the Pod’s network namespace to the default network namespace. The interface is used as the default route (non-VPC traffic) from the Pod, and additional routes are created on each side to direct traffic between the node IP and the Pod IP over the link. For traffic sent over the interface, the Linux kernel borrows the IP address from the IPvlan interface for the Pod side and the boot ENI interface for the Kubelet side. Kubernetes Pods and nodes communicate using the same well-known addresses regardless of which interface (IPvlan or veth) is used for communication. This particular trick of “IP unnumbered configuration” is documented in RFC 5309.
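The split between the two interfaces can be modeled as a longest-prefix-match lookup over the Pod’s routes. The sketch below is only a model of the behavior described above, not the plugins’ code; the interface labels, function names, and example addresses are hypothetical.

```python
import ipaddress
from typing import List, Tuple

Route = Tuple[ipaddress.IPv4Network, str]


def build_pod_routes(vpc_cidr: str, node_ip: str) -> List[Route]:
    """Routes as seen from inside a Pod, following the description in the text."""
    return [
        (ipaddress.ip_network(vpc_cidr), "ipvlan"),        # all VPC traffic
        (ipaddress.ip_network(f"{node_ip}/32"), "veth"),   # node <-> Pod over the link
        (ipaddress.ip_network("0.0.0.0/0"), "veth"),       # default route (non-VPC)
    ]


def select_interface(routes: List[Route], destination: str) -> str:
    """Pick the interface of the most specific route containing the destination."""
    dst = ipaddress.ip_address(destination)
    matches = [(net, dev) for net, dev in routes if dst in net]
    if not matches:
        raise ValueError(f"no route to {destination}")
    _, dev = max(matches, key=lambda route: route[0].prefixlen)
    return dev


routes = build_pod_routes(vpc_cidr="10.0.0.0/16", node_ip="10.0.5.10")
for dst in ("10.0.9.20", "10.0.5.10", "8.8.8.8"):
    print(dst, "->", select_interface(routes, dst))
```

The node’s own address sits inside the VPC range, but its /32 route is more specific, so traffic to the Kubelet stays on the host-local veth link instead of leaving through the ENI.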

Internet egress

For applications where Pods need to communicate directly with the Internet, our stack can source NAT traffic from the Pod over the primary private IP of the boot ENI by setting the default route to the unnumbered point-to-point interface; this, in turn, enables making use of Amazon’s Public IPv4 addressing attribute feature. When enabled, Pods can egress to the Internet without needing to manage Elastic IPs or NAT Gateways.
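To make the source NAT step concrete, the following is a deliberately simplified model of what the translation does to a Pod’s outbound connection. It is not how the Linux kernel implements connection tracking (which keys on full connection tuples); the `SourceNat` class, its port allocation scheme, and the addresses are hypothetical.

```python
from typing import Dict, Tuple

Endpoint = Tuple[str, int]  # (ip, port)


class SourceNat:
    """Toy source NAT: rewrites Pod source endpoints to the node's primary IP."""

    def __init__(self, node_ip: str, first_port: int = 32768) -> None:
        self.node_ip = node_ip
        self.next_port = first_port
        self.outbound: Dict[Endpoint, Endpoint] = {}
        self.inbound: Dict[Endpoint, Endpoint] = {}

    def translate_outbound(self, pod_src: Endpoint) -> Endpoint:
        """Map a Pod (ip, port) to a (node_ip, port) pair, reusing existing mappings."""
        if pod_src not in self.outbound:
            mapped = (self.node_ip, self.next_port)
            self.next_port += 1
            self.outbound[pod_src] = mapped
            self.inbound[mapped] = pod_src
        return self.outbound[pod_src]

    def translate_inbound(self, node_dst: Endpoint) -> Endpoint:
        """Map a reply addressed to the node back to the original Pod endpoint."""
        return self.inbound[node_dst]


nat = SourceNat(node_ip="10.0.5.10")
seen_by_internet = nat.translate_outbound(("10.0.9.20", 40000))
print(seen_by_internet, "->", nat.translate_inbound(seen_by_internet))
```

Because the packet leaves with the boot ENI’s primary private IP as its source, the instance’s public IPv4 address can be used for egress, which is what makes the Public IPv4 addressing attribute usable from Pods.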

Host namespace interconnect

Kubelets and DaemonSets have high-bandwidth, host-local access to all Pods running on the instance; traffic doesn’t transit ENI devices. Source and destination IPs are the well-known Kubernetes addresses on either side of the connection.

- kube-proxy: We use kube-proxy in iptables mode and it functions as expected, but with the caveat that Kubernetes Services see connections from a node’s source IP instead of the Pod’s source IP, as the netfilter rules are processed in the default namespace. This side effect is similar to Kubernetes behavior under the userspace proxy. Since we’re optimizing for services connecting via the Envoy mesh, this particular tradeoff hasn’t been a significant issue.

- kube2iam: Traffic from Pods to the AWS Metadata service transits over the unnumbered point-to-point interface to reach the default namespace before being redirected via destination NAT. The Pod’s source IP is maintained as kube2iam runs as a normal DaemonSet.

VPC optimizations

Our design is heavily optimized for intra-VPC traffic, where IPvlan is the only overhead between the instance’s ethernet interface and the Pod network namespace. We bias toward traffic remaining within the VPC and not transiting the IPv4 Internet, where veth and NAT overhead is incurred. Unfortunately, many AWS services require transiting the Internet; however, both DynamoDB and S3 offer VPC gateway endpoints.

While we have not yet implemented IPv6 support in our CNI stack, we have plans to do so in the near future. IPv6 can make use of the IPvlan interface for both VPC traffic and Internet traffic, due to AWS’s use of public IPv6 addressing within VPCs and support for egress-only Internet Gateways. NAT and veth overhead will not be required for this traffic.

We’re planning to migrate to a VPC endpoint for DynamoDB and use native IPv6 support for communication to S3. Biasing toward extremely low-overhead IPv6 traffic with higher overhead for IPv4 Internet traffic seems like the right future direction.

Ongoing work and next steps

Our stack consists of a slightly modified upstream IPvlan CNI plugin, an unnumbered point-to-point CNI plugin, and an IPAM plugin that does the bulk of the heavy lifting. We’ve opened a pull request against the CNI plugins repo with the hope that we can unify the upstream IPvlan plugin functionality with our additional change, which permits the IPAM plugin to communicate back to the IPvlan driver the interface (ENI device) containing the allocated Pod IP address.

Short of adding IPv6 support, we’re close to being feature complete with our initial design. We’re very interested in hearing feedback on our CNI stack, and we’re hopeful the community will find it a helpful addition that encourages Kubernetes adoption on AWS. Please reach out to us via GitHub, email, or Gitter.

Thanks

cni-ipvlan-vpc-k8s is a team effort combining engineering resources from Lyft’s Infrastructure and Security teams. Special thanks to Yann Ramin, who coauthored much of the code, and Mike Cutalo, who helped get the testing infrastructure into shape.

Interested in working on Kubernetes? Lyft is hiring! Drop me a note on Twitter or at pfisher@lyft.com.
