What do made-for-AI processors really do?

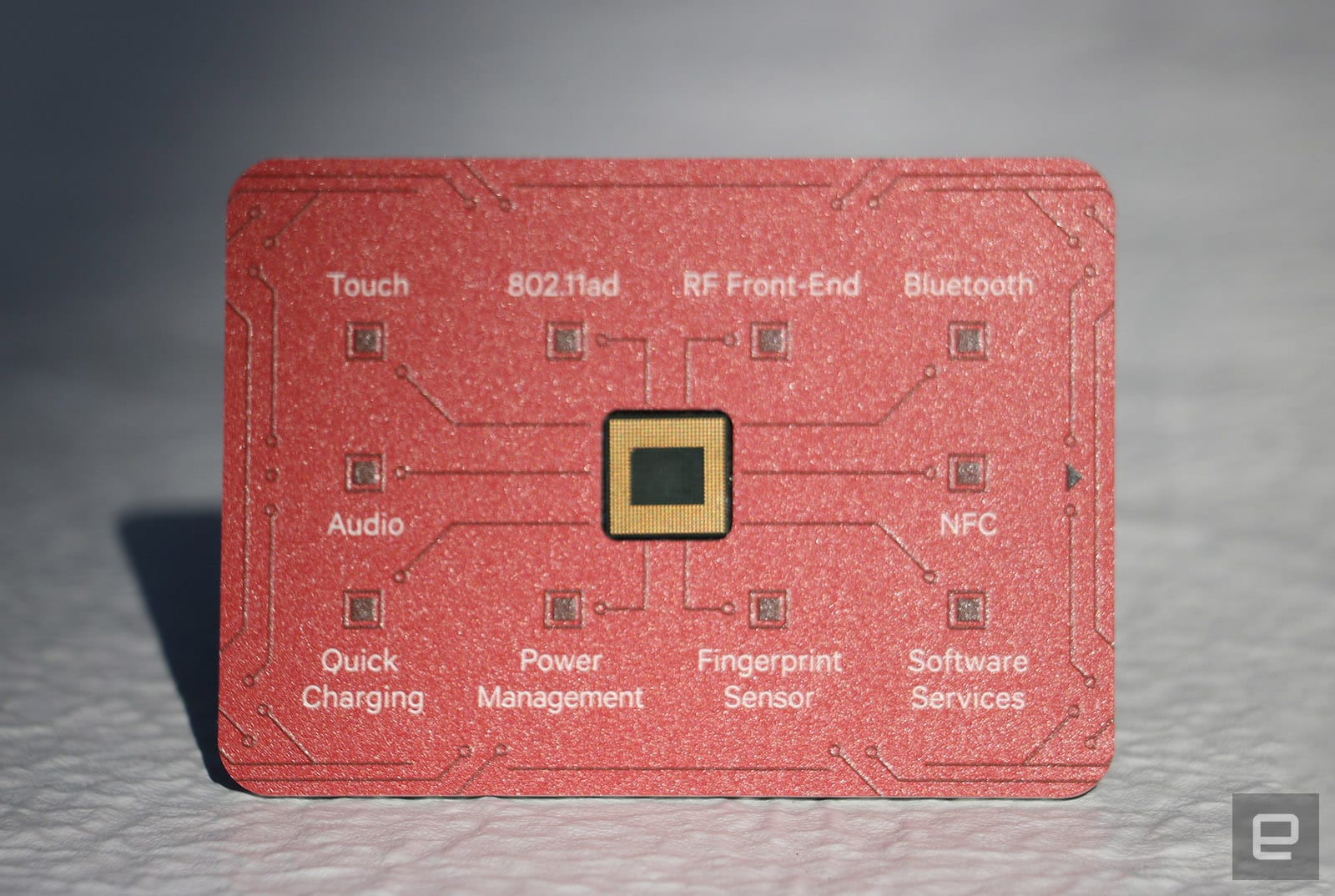

Last week, Qualcomm announced the Snapdragon 845, which sends AI tasks to the most suitable cores. There isn't a lot of difference between the three companies' approaches (Apple's, Huawei's and Qualcomm's): it ultimately boils down to the level of access each company offers developers and how much power each setup consumes.

Before we get into that, though, let's figure out whether an AI chip is really all that different from existing CPUs. A term you'll hear a lot in the industry in reference to AI lately is "heterogeneous computing." It refers to systems that use multiple types of processors, each with specialized functions, to gain performance or save energy. The idea isn't new, and plenty of existing chipsets use it; the three new offerings in question just employ the concept to varying degrees.

Smartphone CPUs from the last three years or so have used ARM's big.LITTLE architecture, which pairs relatively slow, energy-saving cores with faster, power-hungry ones. The main goal is to use as little power as possible, to get better battery life. Some of the first phones to use this architecture include the Samsung Galaxy S4 with the company's own Exynos 5 chip, as well as Huawei's Mate 8 and Honor 6.
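
To make the big.LITTLE trade-off concrete, here is a minimal sketch of the decision a scheduler faces. The core names, power draws and speeds are hypothetical figures chosen for illustration, not measurements from any real chip. The point is only that energy is power multiplied by time, so a slower, low-power core can still come out ahead when the work is light and the deadline is relaxed.

```python
from dataclasses import dataclass

@dataclass
class Core:
    name: str
    watts: float           # hypothetical active power draw
    ops_per_second: float  # hypothetical throughput

# Hypothetical figures for illustration only, not taken from any real chip.
LITTLE = Core("little", watts=0.2, ops_per_second=1e9)
BIG = Core("big", watts=1.5, ops_per_second=4e9)
CORES = (LITTLE, BIG)

def pick_core(ops: float, deadline_s: float) -> Core:
    """Return the lowest-energy core that can finish `ops` operations before the deadline."""
    fast_enough = [c for c in CORES if ops / c.ops_per_second <= deadline_s]
    if not fast_enough:
        # Nothing meets the deadline, so fall back to the fastest core.
        return max(CORES, key=lambda c: c.ops_per_second)
    # Energy (joules) = power (watts) x time (seconds).
    return min(fast_enough, key=lambda c: c.watts * ops / c.ops_per_second)

print(pick_core(5e8, deadline_s=1.0).name)  # little: 0.1 J vs 0.1875 J on the big core
print(pick_core(3e9, deadline_s=1.0).name)  # big: the little core would need 3 s
```

Real schedulers weigh many more factors, such as thermal limits and the cost of moving work between cores, so treat this as an illustration of the trade-off rather than a description of how any kernel actually decides.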

This year's "AI chips" take this concept a step further, either by adding a dedicated component to execute machine-learning tasks or, in the case of the Snapdragon 845, by using other low-power cores to do so. For instance, the Snapdragon 845 can tap its digital signal processor (DSP) to handle long-running tasks that require a lot of repetitive math, like listening out for a hotword. Activities like image recognition, on the other hand, are better managed by the GPU, Qualcomm Director of Product Management Gary Brotman told Engadget. Brotman heads up AI and machine learning for the Snapdragon platform.
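
The division of labor Brotman describes can be pictured as a simple routing table. This is a hypothetical sketch: the workload labels and the `route` helper are invented for illustration and only encode the two examples Qualcomm gave. On real devices, developers or OEMs make this choice, not the chip itself.

```python
from enum import Enum

class Unit(Enum):
    CPU = "cpu"
    GPU = "gpu"
    DSP = "dsp"

# Hypothetical mapping based on Qualcomm's two examples:
# long-running, repetitive math (hotword listening) -> DSP,
# image recognition -> GPU. Anything else stays on the CPU.
ROUTES = {
    "hotword_detection": Unit.DSP,
    "image_recognition": Unit.GPU,
}

def route(workload: str) -> Unit:
    """Return the processor a workload would be sent to, defaulting to the CPU."""
    return ROUTES.get(workload, Unit.CPU)

for job in ("hotword_detection", "image_recognition", "spreadsheet_recalc"):
    print(job, "->", route(job).value)
```

A plain dictionary keeps the policy in one place, so changing which processor handles a workload means editing a single entry rather than the code that runs the model.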

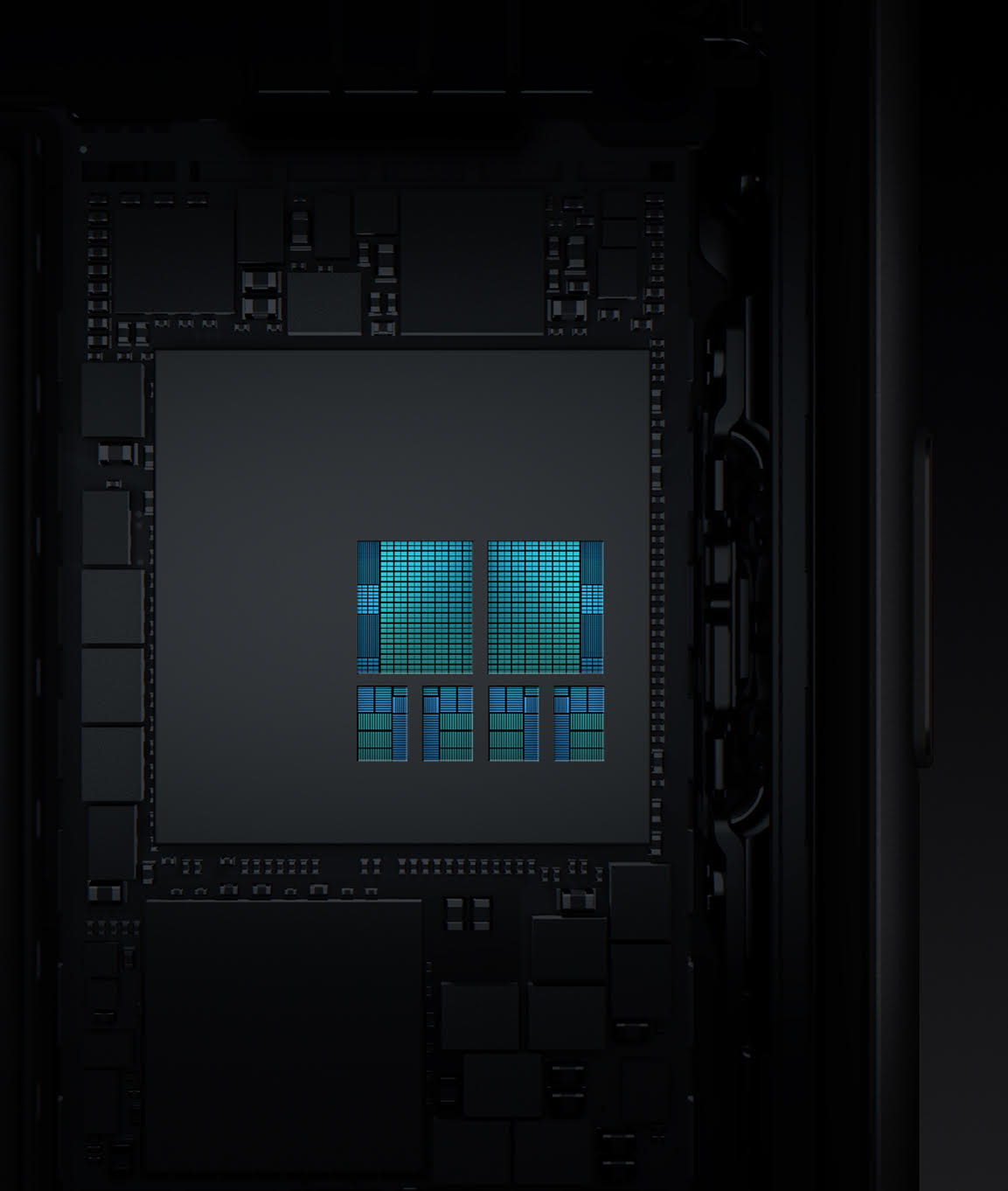

Meanwhile, Apple's A11 Bionic uses a dedicated "neural engine" to speed up Face ID, Animoji and some third-party apps. That means when you fire up those features on your iPhone X, the A11 turns on the neural engine to perform the calculations needed to either verify who you are or map your facial expressions onto a talking poop emoji.

On the Kirin 970, the neural processing unit (NPU) takes over tasks like scanning and translating words in pictures taken with Microsoft's Translator, which is the only third-party app so far to have been optimized for this chipset. Huawei said its "HiAI" heterogeneous computing structure maximizes the performance of most of the components on its chipset, so it may be assigning AI tasks to more than just the NPU.

Differences aside, this new architecture means that machine-learning computations, which used to be processed in the cloud, can now be carried out more efficiently on the device. By using components other than the CPU to run AI tasks, your phone can do more things simultaneously, so you're less likely to encounter lag when waiting for a translation or searching for a picture of your dog.

Plus, running these processes on your phone instead of sending them to the cloud can also be better for your privacy, since it reduces the opportunities for hackers to get at your data.

Another big advantage of these AI chips is energy savings. Power is a precious resource that must be allocated judiciously, because some of these activities can be repeated all day. The GPU tends to draw more power, so if the more energy-efficient DSP can perform a task with similar results, it's better to tap the latter.
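
The rule of thumb above ("use the DSP if it gives similar results") can be written as a small selection function. All numbers below are hypothetical, and the `accuracy` field is a stand-in for whatever measure of result quality a developer cares about. The sketch picks the lowest-energy processor whose quality is within a tolerance of the best available option.

```python
from dataclasses import dataclass

@dataclass
class Option:
    unit: str
    watts: float     # hypothetical average power while running the task
    seconds: float   # hypothetical time to finish one run
    accuracy: float  # hypothetical result quality, 0.0 to 1.0

    @property
    def joules(self) -> float:
        # Energy per run = power x time.
        return self.watts * self.seconds

def cheapest_acceptable(options: list[Option], tolerance: float = 0.01) -> Option:
    """Pick the lowest-energy option whose accuracy is within `tolerance` of the best."""
    best_accuracy = max(o.accuracy for o in options)
    acceptable = [o for o in options if best_accuracy - o.accuracy <= tolerance]
    return min(acceptable, key=lambda o: o.joules)

# Hypothetical figures for illustration only.
gpu = Option("gpu", watts=2.0, seconds=0.010, accuracy=0.95)
dsp = Option("dsp", watts=0.5, seconds=0.020, accuracy=0.945)
print(cheapest_acceptable([gpu, dsp]).unit)  # dsp: 0.01 J per run vs 0.02 J
```

Even though the DSP is slower here, it uses half the energy per run, which adds up for a task like hotword listening that repeats all day. Tightening `tolerance` to 0.001 would rule the DSP out and select the GPU instead.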

To be clear, it's not the chipsets themselves that decide which cores to use when executing certain tasks. "Today, it's up to developers or OEMs where they want to run it," Brotman said. Programmers can use supported libraries like Google's TensorFlow (or, more specifically, its Lite mobile version) to dictate which cores run their models. Qualcomm, Huawei and Apple all work with the most popular options, like TensorFlow Lite and Facebook's Caffe2. Qualcomm also supports the newer Open Neural Network Exchange (ONNX), while Apple adds compatibility for even more machine-learning models via its Core ML framework.
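
Because the choice sits with developers, app code typically states a preference and falls back when an accelerator isn't available. The sketch below is hypothetical: `choose_backend`, the backend names and the device's set of processors are invented for illustration and are not taken from TensorFlow Lite, Caffe2, ONNX or Core ML.

```python
# Hypothetical sketch: a developer lists preferred processors in order, and the
# app falls back to the CPU, which every phone has, if none of them is available.
def choose_backend(preferred: list[str], available: set[str]) -> str:
    """Return the first preferred backend the device supports, else 'cpu'."""
    for backend in preferred:
        if backend in available:
            return backend
    return "cpu"

# A phone with a DSP but no dedicated neural processor (hypothetical).
device = {"cpu", "gpu", "dsp"}
print(choose_backend(["npu", "dsp", "gpu"], device))  # dsp
print(choose_backend(["npu"], device))                # cpu
```

Ordering the preference list is where the developer's judgment comes in: the same app can ask for an NPU on a Kirin 970 and quietly land on a DSP or the CPU elsewhere.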

So far, none of these chips has delivered very noticeable real-world benefits. Chip makers will tout their own test results and benchmarks, which are ultimately meaningless until AI processes become a more integral part of our daily lives. We're in the early stages of on-device machine learning, and developers who have made use of the new hardware are few and far between.

Right now, though, it's clear that the race is on to make machine-learning tasks run much faster and more power-efficiently on your device. We'll just have to wait a while longer to see the real benefits of this pivot to AI.
