This year, the artificial intelligence programs AlphaGo and Libratus triumphed over the world's best human players in Go and Poker respectively. While these milestones showed how far AI has come in recent years, many remain sceptical about the emerging technology's overall maturity, especially given a series of AI gaffes over the past 12 months.

At Synced we're naturally fans of machine intelligence, but we also recognize that some new techniques struggle to perform their tasks effectively, often blundering in ways that humans wouldn't. Here are our picks of notable AI fails of 2017.

Face ID cracked by a mask

Face ID, the facial recognition method that unlocks the new iPhone X, was heralded as the most secure AI activation method ever, with Apple boasting that the chance of it being fooled was one in a million. But then Vietnamese company Bkav cracked it using a US$150 mask constructed of 3D-printed plastic, silicone, makeup and cutouts. Bkav simply scanned a test subject's face, used a 3D printer to generate a face model, and affixed paper-cut eyes and mouth and a silicone nose. The crack sent shockwaves through the industry, upping the stakes on consumer device privacy and, more generally, on AI-powered security.

Neighbours call the police on Amazon Echo

The popular Amazon Echo is considered one of the more robust smart speakers. But nothing's perfect. A German man's Echo was accidentally activated while he was not at home and began blaring music after midnight, waking the neighbours. They called the police, who had to break down the front door to turn off the offending speaker. The police also replaced the door lock, so when the man returned he found his key no longer worked.

Facebook chatbots shut down

This July, it was widely reported that two Facebook chatbots had been shut down after communicating with each other in an unrecognizable language. Rumours of a new secret superintelligent language flooded discussion boards until Facebook explained that the cryptic exchanges had merely resulted from a grammar coding oversight.

Las Vegas self-driving bus crashes on day one

A self-driving bus made its debut in Las Vegas this November with great fanfare, with resident magicians Penn & Teller among the celebrities queuing for a ride. However, within just two hours the bus was involved in a crash with a delivery truck. While the bus was technically not at fault for the accident, and police cited the delivery truck driver, passengers on the smart bus complained that it was not intelligent enough to move out of harm's way as the truck slowly approached.

Google Allo responds to a gun emoji with a turban emoji

A CNN staff member received an emoji suggestion of a person wearing a turban via Google Allo. The suggestion was triggered by an emoji that included a pistol. An embarrassed Google assured the public that it had addressed the problem and issued an apology.

HSBC voice ID fooled by twin

HSBC's Voice ID is an AI-powered security system that allows customers to access their accounts with voice commands. Although the company claims it is as secure as fingerprint ID, a BBC reporter's twin brother was able to access the reporter's account by mimicking his voice. The experiment took seven tries. HSBC's immediate fix was to set an account-lockout threshold of three unsuccessful attempts.
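To see why a lockout threshold would have stopped the twin, here is a minimal sketch of a failed-attempt counter. It is not HSBC's implementation: the `VoiceLogin` class is hypothetical, the voice match is reduced to a boolean, and we assume the seven tries were six failures followed by one success.

```python
from dataclasses import dataclass


@dataclass
class VoiceLogin:
    """Hypothetical sketch: lock an account after too many failed voice matches."""
    max_failures: int = 3  # lockout threshold described in the article
    failures: int = 0
    locked: bool = False

    def attempt(self, matched: bool) -> bool:
        """Record one login attempt; return True only if access is granted."""
        if self.locked:
            return False  # once locked, even a matching voice is refused
        if matched:
            self.failures = 0  # a successful login resets the counter
            return True
        self.failures += 1
        if self.failures >= self.max_failures:
            self.locked = True
        return False


# Assumed replay of the twin's experiment: six failed tries, then a match.
login = VoiceLogin()
results = [login.attempt(m) for m in [False] * 6 + [True]]
print(results[-1], login.locked)  # False True
```

With the threshold in place the account locks after the third failure, so the seventh, "successful" attempt is refused.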

Google AI looks at rifles and sees helicopters

By slightly tweaking a photo of rifles, an MIT research team fooled the Google Cloud Vision API into identifying them as helicopters. The trick, known as adversarial examples, causes computers to misclassify images by introducing changes that are undetectable to the human eye. In the past, adversarial examples only worked if attackers knew the underlying mechanics of the target computer system. The MIT team took a step forward by triggering misclassification without access to such system information.
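The core idea can be illustrated with a toy example. This is a simplified stand-in, not the MIT team's actual attack: the "black box" is a hypothetical linear scorer, and the attacker estimates its gradient purely by querying scores (central finite differences), then nudges every input feature by at most `eps` in the direction that lowers the current class's score.

```python
from typing import Callable, List

Vector = List[float]


def make_toy_model(weights: Vector, bias: float) -> Callable[[Vector], float]:
    """Hypothetical black box: score > 0 means class A, otherwise class B."""
    def score(x: Vector) -> float:
        return sum(w * xi for w, xi in zip(weights, x)) + bias
    return score


def estimate_gradient(score: Callable[[Vector], float], x: Vector, h: float = 1e-4) -> Vector:
    """Estimate d(score)/dx using only score queries (no access to the weights)."""
    grad = []
    for i in range(len(x)):
        up, down = list(x), list(x)
        up[i] += h
        down[i] -= h
        grad.append((score(up) - score(down)) / (2 * h))
    return grad


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def adversarial_step(score: Callable[[Vector], float], x: Vector, eps: float) -> Vector:
    """Shift each feature by at most eps, pushing the score toward the other class."""
    g = estimate_gradient(score, x)
    direction = -1.0 if score(x) > 0 else 1.0
    return [xi + direction * eps * sign(gi) for xi, gi in zip(x, g)]


score = make_toy_model([0.5, -0.3, 0.8, 0.1], bias=-0.2)
x = [0.4, 0.5, 0.3, 0.6]
x_adv = adversarial_step(score, x, eps=0.1)
print(score(x) > 0, score(x_adv) > 0)  # True False
```

Each feature moves by only 0.1, yet the predicted class flips, because the small changes all push the score the same way and add up. Real images have many thousands of pixels, so a much smaller per-pixel change can accumulate in the same manner.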

Street sign hack fools self-driving cars

Researchers found that by making discreet applications of paint or tape to stop signs, they could trick self-driving cars into misclassifying those signs. A stop sign modified with the words "love" and "hate" fooled a self-driving car's machine learning system into misclassifying it as a "Speed Limit 45" sign in 100% of test cases.

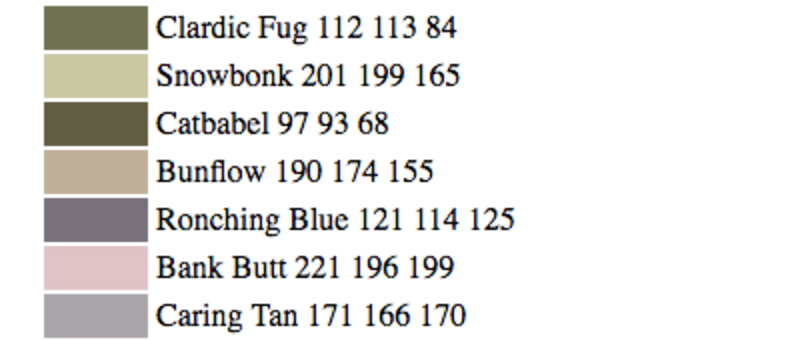

AI imagines a Financial institution Butt sunset

Machine learning researcher Janelle Shane trained a neural network to generate new paint colours along with names that would "match" each colour. The colours may have been fine, but the names were hilarious. Even after a few iterations of training on colour-name data, the model still labeled sky blue as "Grey Pubic" and a dark green as "Stoomy Brown."

Careful what you ask Alexa for, you might get it

The Amazon Alexa virtual assistant can make online shopping easier. Maybe too easy? In January, San Diego news channel CW6 reported that a six-year-old girl had purchased a US$170 dollhouse simply by asking Alexa for one. That's not all. When the on-air TV anchor repeated the girl's words, saying, "I love the little girl saying, 'Alexa ordered me a dollhouse,'" Alexa devices in some viewers' homes were in turn triggered to order dollhouses.

Journalist: Tony Peng | Editor: Michael Sarazen
